Key Takeaways

- Agentic AI’s Evolution: Autonomous AI agents are transitioning from research prototypes to enterprise production, enhancing decision-making and operational efficiency.

- Tech Stack Significance: A robust tech stack is essential for agents to manage memory, adapt to dynamic environments, and effectively interact with external tools.

- LLMs as the Brain: Large Language Models (LLMs) like GPT-4, Claude, and Gemini provide reasoning, language understanding, and task execution capabilities.

- Agent Orchestration Frameworks: LangChain, CrewAI, and AutoGen define how agents plan, reason, and delegate tasks.

- Memory Management: Memory systems using vector databases like Pinecone or Weaviate enable agents to store and recall contextual information, supporting long-term decision-making.

- Tool & API Integration: Agents complete tasks by leveraging APIs, automation platforms (e.g., UiPath), and internal or external data sources.

- Execution Environment: Depending on scalability, security, and compliance needs, deployment options include serverless services, containers, and on-premises solutions.

- Observability and Monitoring: Tools like LangSmith, OpenTelemetry, and ELK provide insights into agent behavior, performance, and error diagnostics.

- Component Interaction: AI agents operate in continuous loops, interpreting goals, planning actions, executing tools, storing outcomes in memory, and refining subsequent actions.

- Future-Proofing the Stack: Choose modular and interoperable components that allow for easy upgrades, LLM replacements, and seamless tool integrations.

Autonomous, decision-making AI agents are moving from research demos to enterprise production environments. These agents—capable of perceiving context, selecting tools, reasoning, and executing tasks—form the foundation of Agentic AI.

But behind that polished prompt interface lies a multi-layered tech stack: orchestration frameworks, memory engines, tool APIs, and integration layers that make the magic work.

This blog breaks down the agentic AI stack—explaining the core components, how they interact, and how to design a future-proof, enterprise-grade architecture.

Why Does a Tech Stack Matter for Agents?

Unlike traditional RPA bots or rule-based automation scripts, AI agents operate in dynamic, multi-step environments:

- They need memory, not just instructions.

- They use tools, not just code paths.

- They adapt to context, not just hardcoded flows.

The tech stack enables this by abstracting away complexity: it lets developers define agents that reason and act, while the stack handles the how.

Core Components of the Agentic AI Stack

Let’s break down the stack from the ground up:

1. Large Language Models (LLMs)

The brain behind most agents.

- Examples: GPT-4, Claude, Gemini, Mistral, LLaMA

- Hosted via APIs or on-prem (for compliance-sensitive enterprises)

- Support function calling, tool selection, summarization, and reasoning
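Because the model may be a hosted API today and an on-prem deployment tomorrow, it can help to hide it behind a small interface. The sketch below is only an illustration: `ChatModel`, `HostedModel`, `OnPremModel`, and `summarize` are hypothetical names, and the two classes are stand-ins that return canned strings rather than calling any real vendor SDK.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical minimal interface every model backend must satisfy."""

    def complete(self, prompt: str) -> str: ...


class HostedModel:
    """Stand-in for a model reached through a hosted API."""

    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt}"


class OnPremModel:
    """Stand-in for an on-prem model behind the same interface."""

    def complete(self, prompt: str) -> str:
        return f"[on-prem] {prompt}"


def summarize(model: ChatModel, text: str) -> str:
    # Agent code depends only on the interface, not on a vendor SDK.
    return model.complete(f"Summarize: {text}")


print(summarize(HostedModel(), "quarterly report"))
print(summarize(OnPremModel(), "quarterly report"))
```

Swapping the model then means passing a different object to `summarize`; the agent logic itself does not change.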

2. Agent Orchestration Frameworks

Define how agents plan, execute, delegate, and reason.

- LangChain – Modular chain-based orchestration, broad community support

- CrewAI – Multi-agent collaboration framework (specialized roles)

- AutoGen (Microsoft) – Conversational multi-agent orchestration

- Haystack (deepset) – Great for knowledge retrieval agents

- Semantic Kernel (Microsoft) – Agent skills + planner integration

3. Memory & Context Management

Allows agents to remember, recall, and persist knowledge across steps or sessions.

- Vector DBs (Pinecone, Weaviate, FAISS, Qdrant)

- Key-Value Stores (Redis for transient memory)

- Document Stores (MongoDB, Elasticsearch for source data)

Used for:

- RAG (Retrieval-Augmented Generation)

- Agent memory

- Context injection (long conversations, historical knowledge)
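To make the store-and-recall idea concrete, here is a toy in-memory version of what a vector database does for an agent. It is a sketch under loud assumptions: `embed` is a bag-of-words counter standing in for a real embedding model, and `AgentMemory` is a hypothetical class, not the API of Pinecone, Weaviate, FAISS, or Qdrant.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy embedding: word counts. A real system would call an embedding model.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class AgentMemory:
    """Hypothetical memory store: keeps (vector, text) pairs and ranks by similarity."""

    def __init__(self) -> None:
        self._items: list = []

    def store(self, text: str) -> None:
        self._items.append((embed(text), text))

    def recall(self, query: str, k: int = 1) -> list:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


memory = AgentMemory()
memory.store("The customer prefers invoices in PDF format")
memory.store("The deployment window is Saturday night")

# Context injection: prepend the best-matching memory to the next prompt.
context = memory.recall("customer invoices format")
prompt = f"Context: {context[0]}\nQuestion: Which invoice format should I use?"
print(prompt)
```

The same pattern underlies RAG: retrieve the most similar stored text, then inject it into the prompt before calling the model.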

4. Tool & API Layer

Agents need tools to fetch data, call APIs, and execute scripts.

- REST/GraphQL APIs (internal microservices, external platforms)

- Automation tools (UiPath, Power Automate, Zapier)

- SDK integrations (Slack, Salesforce, JIRA, Databricks, etc.)

- Code interpreters or shell execution (e.g., Python REPLs)

Tools can be exposed to agents via:

- LangChain’s Tool abstraction

- Function calling schema (OpenAI-style)

- Custom toolkits in frameworks like CrewAI
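As a rough illustration of the function-calling route, the sketch below registers a Python function together with a JSON Schema description and then dispatches a call the way a model might request it. The schema shape is loosely modeled on the OpenAI-style format; `register_tool`, `dispatch`, and `get_ticket_status` are hypothetical names, and the ticket data is made up for the example.

```python
import json
from typing import Any, Callable

TOOLS: dict = {}    # tool name -> Python callable
SCHEMAS: list = []  # descriptions that would be sent to the model


def register_tool(name: str, description: str, parameters: dict) -> Callable:
    """Register a function plus a JSON Schema description the model can read."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        SCHEMAS.append({
            "type": "function",
            "function": {"name": name, "description": description, "parameters": parameters},
        })
        return fn
    return decorator


@register_tool(
    name="get_ticket_status",
    description="Look up the status of a support ticket.",
    parameters={
        "type": "object",
        "properties": {"ticket_id": {"type": "string"}},
        "required": ["ticket_id"],
    },
)
def get_ticket_status(ticket_id: str) -> str:
    # Hypothetical lookup; a real tool would call an internal API here.
    return {"T-1": "open", "T-2": "closed"}.get(ticket_id, "unknown")


def dispatch(tool_call: dict) -> Any:
    """Run a model-issued call shaped like {"name": ..., "arguments": "<json string>"}."""
    fn = TOOLS.get(tool_call["name"])
    if fn is None:
        return f"error: unknown tool {tool_call['name']}"
    return fn(**json.loads(tool_call["arguments"]))


result = dispatch({"name": "get_ticket_status", "arguments": '{"ticket_id": "T-2"}'})
print(result)
```

Returning an error string for unknown tools, rather than raising, lets the agent see the failure and choose a different action.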

5. Execution & Runtime Environment

Where agents live and run.

- Serverless (AWS Lambda, Azure Functions)

- Containers (Docker, Kubernetes for scalable orchestration)

- Cloud-native runtimes (Vercel, Modal, Anyscale)

- On-prem clusters (for air-gapped or regulated workloads)

You’ll need to ensure the following:

- Concurrency handling for multiple agent sessions

- Latency optimization for real-time interactivity

- Security controls (IAM, role-based access)
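The concurrency point can be sketched with nothing more than `asyncio`. The example below caps how many agent sessions run at once. The limit of 2 and the `run_session` helper are assumptions for illustration, and the `sleep` call is a placeholder for real LLM and tool calls.

```python
import asyncio

MAX_CONCURRENT_SESSIONS = 2  # assumed limit; tune per deployment


async def run_session(session_id: int, limiter: asyncio.Semaphore, active: set) -> tuple:
    async with limiter:  # wait here if too many sessions are already running
        active.add(session_id)
        concurrent_now = len(active)
        await asyncio.sleep(0.01)  # placeholder for LLM and tool calls
        active.discard(session_id)
        return session_id, concurrent_now


async def main() -> list:
    limiter = asyncio.Semaphore(MAX_CONCURRENT_SESSIONS)
    active: set = set()
    sessions = [run_session(i, limiter, active) for i in range(5)]
    return await asyncio.gather(*sessions)


results = asyncio.run(main())
print(results)
```

Each tuple holds a session id and the number of sessions that were active when it started, which never exceeds the configured limit.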

6. Observability & Logging

Agent behavior must be tracked and debuggable.

- LangSmith – Trace every agent step, prompt, and output

- OpenTelemetry + ELK/Grafana – Custom enterprise monitoring

- Custom dashboards – For task success, tool usage, LLM cost, etc.
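Before adopting a tracing product, the core idea can be prototyped in a few lines: wrap every agent step, record its status and duration, and derive metrics from the records. This is a minimal sketch, not how LangSmith or OpenTelemetry work internally; `traced`, `TRACE`, and `lookup_order` are hypothetical names.

```python
import functools
import time
from typing import Any, Callable

TRACE: list = []  # in-memory trace log; a real setup would export these records


def traced(step: str) -> Callable:
    """Decorator that records status and duration for each call of an agent step."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            start = time.perf_counter()
            record = {"step": step, "status": "ok"}
            try:
                return fn(*args, **kwargs)
            except Exception as exc:
                record["status"] = "error"
                record["error"] = repr(exc)
                raise
            finally:
                # Runs on success and failure, so every call leaves a record.
                record["duration_ms"] = (time.perf_counter() - start) * 1000
                TRACE.append(record)
        return wrapper
    return decorator


@traced("tool:lookup_order")
def lookup_order(order_id: str) -> str:
    if not order_id:
        raise ValueError("order_id is required")
    return f"order {order_id}: shipped"


lookup_order("A-17")
try:
    lookup_order("")
except ValueError:
    pass

success_rate = sum(r["status"] == "ok" for r in TRACE) / len(TRACE)
print(f"task success rate: {success_rate:.0%}")
```

The same records could feed a dashboard for task success or tool usage; tracking LLM cost would need token counts added to each record.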

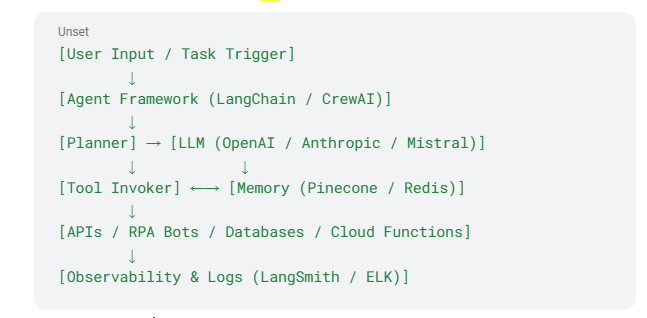

How Do the Components Interact?

Agents operate in loops:

- Interpret goal

- Plan next action

- Select & call tool

- Store outcome in memory

- Decide what to do next
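The steps above can be sketched as a short loop. Everything here is a simplification: `plan_next_action` is a hard-coded stand-in for the LLM planner, the two tools are hypothetical lambdas, and memory is a plain list.

```python
from typing import Callable, Optional

# Hypothetical tools; a real agent would wrap APIs or scripts.
TOOLS: dict = {
    "search": lambda query: f"3 documents found for '{query}'",
    "summarize": lambda text: f"summary of: {text}",
}


def plan_next_action(goal: str, memory: list) -> Optional[tuple]:
    """Stand-in for the LLM planner: choose the next tool based on what memory holds."""
    if not memory:
        return ("search", goal)
    if len(memory) == 1:
        return ("summarize", memory[-1])
    return None  # nothing left to do


def run_agent(goal: str, max_steps: int = 5) -> list:
    memory: list = []
    for _ in range(max_steps):                    # bound the loop so it cannot run forever
        action = plan_next_action(goal, memory)   # interpret goal, plan next action
        if action is None:                        # decide what to do next: stop
            break
        tool_name, tool_input = action
        outcome = TOOLS[tool_name](tool_input)    # select and call tool
        memory.append(outcome)                    # store outcome in memory
    return memory


for step in run_agent("vendor contracts"):
    print(step)
```

The `max_steps` cap is a design choice worth keeping in real agents: it prevents a confused planner from looping indefinitely and running up model costs.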

You can also have multi-agent loops (Planner → Researcher → Coder → Validator).
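A multi-agent loop of this kind can be pictured as a pipeline in which each role consumes the previous role's output. The sketch below assumes each agent is just a function from text to text; in practice each would wrap its own LLM prompt and tools, and frameworks such as CrewAI or AutoGen handle the hand-offs differently.

```python
from typing import Callable

Agent = Callable[[str], str]


def planner(task: str) -> str:
    return f"plan({task})"


def researcher(plan: str) -> str:
    return f"notes({plan})"


def coder(notes: str) -> str:
    return f"code({notes})"


def validator(code: str) -> str:
    return f"approved: {code}"


# Hypothetical role order, matching Planner -> Researcher -> Coder -> Validator.
PIPELINE: list = [
    ("Planner", planner),
    ("Researcher", researcher),
    ("Coder", coder),
    ("Validator", validator),
]


def run_pipeline(task: str) -> list:
    artifact = task
    history = []
    for role, agent in PIPELINE:
        artifact = agent(artifact)          # each role works on the previous output
        history.append((role, artifact))    # keep a trail for debugging
    return history


for role, output in run_pipeline("parse CSV"):
    print(f"{role}: {output}")
```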

Recommended Stack Combinations

Quick POC / Internal Pilot

- LLM: OpenAI GPT-4

- Framework: LangChain or CrewAI

- Memory: Pinecone or FAISS

- Tools: REST APIs, Slack, internal Python scripts

- Hosting: FastAPI app on Vercel, or a Streamlit app on a host that supports long-running servers

- Monitoring: LangSmith

Enterprise-Ready Build

- LLM: Azure OpenAI or on-prem Mistral

- Framework: LangChain + Semantic Kernel

- Memory: Weaviate + Redis hybrid

- Tool Layer: Internal APIs + RPA bots (UiPath)

- Hosting: Docker + Kubernetes (with horizontal scaling)

- Observability: OpenTelemetry + Datadog
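One way to keep such combinations swappable is to treat the stack as plain configuration. The sketch below is an assumption about how you might model it: `StackConfig` and its field names are hypothetical, and the values are simply labels taken from the two lists above.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class StackConfig:
    """Hypothetical description of one stack; each field names a replaceable layer."""
    llm: str
    framework: str
    memory: str
    hosting: str
    monitoring: str


POC = StackConfig(
    llm="OpenAI GPT-4",
    framework="LangChain",
    memory="FAISS",
    hosting="FastAPI",
    monitoring="LangSmith",
)

ENTERPRISE = StackConfig(
    llm="Azure OpenAI",
    framework="LangChain + Semantic Kernel",
    memory="Weaviate + Redis",
    hosting="Docker + Kubernetes",
    monitoring="OpenTelemetry + Datadog",
)

# Swapping one layer produces a new config and leaves every other layer untouched.
ON_PREM = replace(ENTERPRISE, llm="on-prem Mistral")
print(ON_PREM)
```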

Conclusion

Agentic AI requires more than a powerful model: it needs a full stack that supports memory, reasoning, execution, and observability. Choosing the right frameworks, APIs, and hosting environments can make the difference between a flaky prototype and a resilient enterprise-grade deployment.

As the ecosystem evolves, look for modularity and interoperability: the ability to swap LLMs, integrate new tools, and support new workflows without re-architecting from scratch.

The agent is the face, and your tech stack is the brain, muscles, and nervous system.