Key Takeaways

- Evaluating Agentic AI is complex as it requires multidimensional assessment across reasoning accuracy, decision autonomy, and exception handling, unlike traditional automation that relies on more straightforward metrics.

- Core evaluation dimensions include Effectiveness, Efficiency, Autonomy, Accuracy, and Robustness, with advanced metrics like LLM Cost per Task, Hallucination Rate, and Context Utilization Score providing more profound insights.

- Instrumentation is essential for tracking performance. Using tools like OpenTelemetry and Grafana, detailed logging is performed at each agent decision point to capture task success, tool interactions, and LLM reasoning.

- Benchmarking strategies ensure reliability through Synthetic Task Benchmarks that simulate real-world scenarios, Real Task Replays for enterprise-specific performance evaluation, and Human-in-the-Loop Feedback for refining agent behavior.

- Choosing the right tech stack is crucial, with agent frameworks like LangChain or CrewAI, observability tools like Prometheus or Datadog, and SQL/NoSQL databases for task outcome storage.

- Continuous improvement is achieved by integrating feedback into retraining pipelines, ensuring agents align with business goals and consistently meet KPIs.

- Building trust in Agentic AI requires transparent evaluation, clear reporting, and treating agents as evolving decision-makers rather than static automation tools.

As enterprises adopt Agentic AI—autonomous systems capable of planning, reasoning, and acting—there’s growing pressure to measure their value objectively. While large language models (LLMs) are evaluated on benchmarks like MMLU or TruthfulQA, enterprise stakeholders need something different.

How do we measure the real-world performance of agents executing business-critical workflows?

This blog explores how to evaluate Agentic AI in enterprise contexts. We’ll define key KPIs, discuss architectural touchpoints for instrumentation, and provide benchmarking strategies aligned with business outcomes.

Also read: The Tech Stack Behind Agentic AI in the Enterprise: Frameworks, APIs, and Ecosystems

The Problem Space: Why Evaluation Is Hard?

Traditional automation (like RPA) is evaluated using binary metrics—success/failure, time saved, and error reduction. Agentic AI adds cognitive complexity, such as:

- Reasoning accuracy across multi-step tasks

- Goal alignment with dynamic instructions

- Context retention over long conversations

- Tool selection decisions under ambiguity

- Handling exceptions when APIs fail or data is missing

You’re no longer testing “Did the bot click the button?” but “Did the agent make the right decision across seven steps?”

Challenges include:

- Lack of standard metrics for autonomy or reasoning quality

- Black-box behavior from LLMs

- Tool/API errors affecting task success (not always the agent’s fault)

- Evaluating subjective goals (e.g., “Did it summarize well?”)

What to Measure: Core Evaluation Axes?

Here’s a framework for thinking about evaluation across five key dimensions:

| Dimension | KPI | Description |

| Effectiveness | Task Success Rate | % of agent-initiated tasks completed end-to-end correctly |

| Efficiency | Avg Task Duration | Time taken vs baseline automation or manual process |

| Autonomy | Decision Turn Count | # of actions taken without human intervention |

| Accuracy | Tool/Action Selection Accuracy | Did the agent choose the right API/tool at each step? |

| Robustness | Recovery Rate | % of failures recovered through retry, fallback, or clarification |

Optional advanced metrics:

- LLM Cost per Task (tokens consumed × model cost)

- Hallucination Rate (especially in summarization or generation)

- Latency Per Agent Loop (for responsiveness tuning)

- Context Utilization Score (how much memory or past context is used)

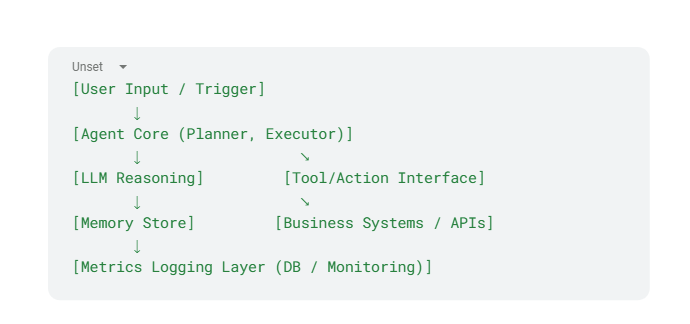

Solution Architecture: Instrumenting the Agent

Your agent platform must be instrumented with observability hooks to track these KPIs.

Instrumentation Points:

- Log every agent step with timestamp, action taken, and tool used

- Log inputs/outputs from LLMs for later replay or audit

- Tag failure types: hallucination, timeout, tool error, misinterpretation

- Track token usage and latency for each reasoning call

- Capture human override rate if there’s fallback to a human-in-the-loop

Technology Stack Consideration

| Layer | Stack Options |

| Agent Framework | LangChain, CrewAI, Autogen |

| Observability | OpenTelemetry, Prometheus, ELK, Datadog |

| Task Outcome Store | SQL/NoSQL DB, Vector DB with feedback tagging |

| Evaluation Pipelines | Custom scripts, LangSmith, HumanEval-style tests |

| Metrics Dashboard | Grafana, Power BI, Streamlit (for exec visibility) |

Benchmarking Approaches

To go beyond anecdotal testing, enterprises need structured benchmarks:

1. Synthetic Task Benchmarks

Create a set of 50–100 simulated prompts across common workflows:

- “Download the latest sales data, clean it, and upload to SharePoint.”

- “Monitor server metrics and open a JIRA ticket if CPU > 80%.”

Evaluate each version of your agent on:

- Task success %

- Token cost

- Latency

- Memory usage

- Action accuracy (compare expected tool vs chosen tool)

2. Real Task Replay

Replay anonymized, historical tickets or workflows to evaluate real-world performance—ideal for finance, IT, and support tasks.

3. Human-in-the-loop Feedback

Collect structured feedback:

- 👍/👎 on agent performance

- Clarification vs failure vs hallucination tags

- Feedback loop integration for agent retraining

Conclusion

As enterprises scale their use of Agentic AI, rigorously evaluating these systems is critical. Don’t treat them as “just chatbots”—they are evolving knowledge workers with decision-making autonomy.

You can turn agent development from art to science and build trust in AI-driven automation by tracking the right metrics- from success rates to reasoning steps. To learn more, get in touch with us today.