Data plays a significant role for any business that wants to grow and run efficiently. Whether you are a mid-sized firm or an established enterprise, mishandling data can damage your business, because data is what lets companies grow and make decisions they will not regret later. From understanding consumer behavior to streamlining processes, data underpins it all. However, handling vast amounts of data is difficult, even on cloud-based platforms like Azure Synapse Analytics. Common challenges include spotting bottlenecks and maintaining data quality. These problems have persisted for years and have left many companies lagging behind. If you are one of them, generative AI can help.

Generative AI uses advanced algorithms to help improve data accuracy, automate data handling, and streamline processes. Other benefits include adjusting pipeline configurations, predicting anomalies, and discovering patterns. These traits set generative AI apart from conventional automation methods. If your company is seeking more robust and scalable solutions, generative AI integrated with Azure Synapse Analytics is worth considering.

What are Data Pipelines in Azure Synapse Analytics?

A data pipeline is a series of processes that extract data from several sources and transform it to meet analytical requirements. Once transformed, the data is loaded into a storage or analytical environment. In Azure Synapse Analytics, data pipelines can handle vast volumes of structured and unstructured data, working with services like the following:

- Power BI for visual analytics

- Spark pools for large-scale data processing

- Synapse SQL for queries

- Azure Data Factory for orchestration

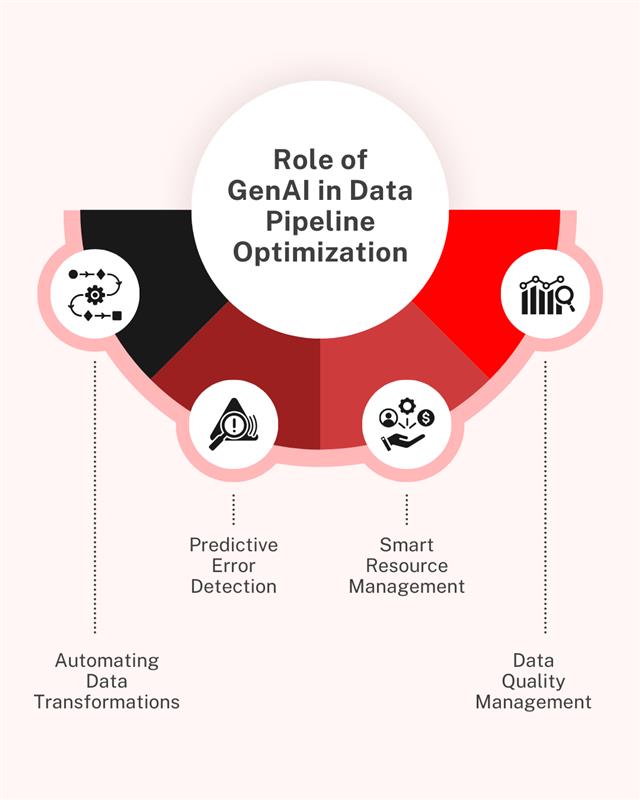

Role of GenAI in Data Pipeline Optimization

Generative AI is transforming how businesses optimize and manage their data pipelines. Built on machine learning models, it can support decisions that previously required human judgment, help resolve complex problems, and automate repetitive tasks. This improves operational efficiency, reduces manual intervention, and helps keep the data accurate.

Let’s look in detail at how generative AI improves data pipeline management:

Automating Data Transformations

One of the most vital tasks in a data pipeline is data transformation. This is where raw data gathered from several sources is cleaned, structured, and formatted for analysis. Generative AI automates this step by analyzing patterns in the data and generating optimized transformation logic. Traditionally, data engineers spend a lot of time writing SQL queries from scratch and preparing code and scripts to run these transformations. Generative AI, by contrast, can interpret data schemas, find correlations, and generate scripts on demand, which reduces the chance of manual errors.

For example, a generative AI system can identify missing data fields and apply the required changes without manual input.
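
To make the idea concrete, here is a minimal Python sketch of the kind of rule such a system might generate. It is not a generative model itself: the schema in `EXPECTED_FIELDS`, the field names, and the default values are all hypothetical, standing in for logic a model would propose after inspecting the data.

```python
from typing import Any, Dict, List

# Hypothetical target schema: field name -> default used when the field is missing.
# In practice these rules would be proposed by the model and reviewed by an engineer.
EXPECTED_FIELDS: Dict[str, Any] = {
    "customer_id": None,   # no safe default; stays None so it can be flagged later
    "country": "UNKNOWN",
    "amount": 0.0,
}


def find_missing_fields(record: Dict[str, Any]) -> List[str]:
    """Return the expected fields that are absent or empty in the record."""
    return [name for name in EXPECTED_FIELDS if record.get(name) in (None, "")]


def fill_missing_fields(record: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the record with missing fields set to their defaults."""
    fixed = dict(record)
    for name in find_missing_fields(record):
        fixed[name] = EXPECTED_FIELDS[name]
    return fixed
```

Calling `fill_missing_fields({"customer_id": 7, "country": ""})` would fill in `country` and `amount` with their defaults while leaving the original record untouched.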

Predictive Error Detection

Data pipeline failures are a common problem, often caused by integration errors, infrastructure issues, or corrupted data. Generative AI addresses these challenges through predictive error detection. By analyzing historical performance data, it finds anomalies and estimates the likelihood of failure. It does not stop at detecting errors; it also recommends actionable solutions. Whether that means adjusting processing thresholds or restarting pipeline components, the goal is to keep data flowing with minimal interruption.
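
The core of this idea, comparing a new run against historical behavior, can be sketched in a few lines of Python. This is a simple statistical stand-in (a z-score check), not a generative model, and the function name and the threshold of 3 standard deviations are assumptions chosen for illustration.

```python
from statistics import mean, stdev
from typing import List


def is_anomalous_run(history: List[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag the latest run duration if it deviates strongly from past runs.

    `history` holds past run durations (for example, in minutes). The run is
    flagged when it lies more than `threshold` standard deviations from the mean.
    """
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # all past runs identical: any change is unusual
    return abs(latest - mu) / sigma > threshold
```

A flagged run would then be handed to whatever component recommends the fix, such as raising a threshold or restarting a pipeline stage.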

Smart Resource Management

Data pipelines operate in different environments, and their workload changes constantly. If too few resources are allocated, processing is delayed; if too many are allocated, costs rise unnecessarily. This is where generative AI comes in handy. It monitors pipeline workload in real time and uses that analysis to anticipate upcoming resource demands and adjust allocation accordingly.

For example, generative AI can allocate extra computational power during peak data ingestion. When activities are low, it can scale down to lessen costs. This way, resources can be used effectively without being wasted.
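
A minimal sketch of such a scaling decision is shown below. The utilization thresholds (80% and 30%), the node limits, and the function name `recommend_node_count` are assumptions for illustration; a real setup would take its signal from monitoring data and apply the result through the platform's own autoscale settings.

```python
def recommend_node_count(
    current_nodes: int,
    cpu_utilization: float,
    min_nodes: int = 3,
    max_nodes: int = 20,
) -> int:
    """Suggest a node count from current utilization (0.0 to 1.0).

    Scales out when the pool is busy, scales in when it is mostly idle,
    and always stays within the configured minimum and maximum.
    """
    if cpu_utilization > 0.80:      # peak ingestion: double capacity
        target = current_nodes * 2
    elif cpu_utilization < 0.30:    # low activity: halve capacity
        target = current_nodes // 2
    else:
        target = current_nodes
    return max(min_nodes, min(max_nodes, target))
```

Clamping to `min_nodes` and `max_nodes` keeps an aggressive recommendation from either starving the pipeline or running up an unbounded bill.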

Data Quality Management

Ensuring high data quality is crucial for accurate decision-making. GenAI enhances data quality management by identifying inconsistencies, anomalies, and missing values. Unlike traditional rule-based methods, which often fail to detect complex errors, GenAI models learn from vast datasets to uncover subtle patterns indicative of data quality issues.

Once an anomaly is detected, GenAI provides intelligent recommendations for resolving the issue. It may suggest reprocessing specific data batches, applying normalization techniques, or enriching data with external sources. Automated error correction enhances data reliability, ensuring that downstream analytics and machine learning models produce accurate insights.


Key Use Cases of GenAI in Azure Synapse Analytics

Below are some of the ways generative AI can be used in Azure Synapse Analytics:

1. Automated Data Mapping and Transformation

GenAI can automatically map source data to the target schema, reducing manual coding efforts. It analyzes metadata and past transformation logic to recommend appropriate transformation pipelines.

Example:

- AI detects redundant columns and suggests data normalization.

- It auto-generates SQL code for transformations using natural language prompts.
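
As a rough illustration of automated mapping, the sketch below matches source columns to a target schema by name similarity using Python's standard `difflib` module. This is a simple heuristic stand-in for what a model would do with richer metadata; the column names and the `cutoff` value are hypothetical.

```python
from difflib import get_close_matches
from typing import Dict, List, Optional


def map_columns(
    source_columns: List[str],
    target_columns: List[str],
    cutoff: float = 0.6,
) -> Dict[str, Optional[str]]:
    """Map each source column to the most similar target column, or None.

    Names are compared case-insensitively with underscores removed, so
    "CustomerID" and "customer_id" are treated as identical.
    """
    normalized_targets = {t.lower().replace("_", ""): t for t in target_columns}
    mapping: Dict[str, Optional[str]] = {}
    for column in source_columns:
        key = column.lower().replace("_", "")
        match = get_close_matches(key, list(normalized_targets), n=1, cutoff=cutoff)
        mapping[column] = normalized_targets[match[0]] if match else None
    return mapping
```

Columns that come back as `None` are the ones a person (or a model with more context) still needs to resolve.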

2. Anomaly Detection and Data Quality Management

AI-powered algorithms can monitor incoming data for inconsistencies or anomalies, ensuring high data quality.

Example:

- GenAI flags outliers in financial transaction data.

- It applies missing value imputation using historical patterns.
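
Both examples can be approximated with classic statistics, which is useful as a baseline before bringing in a model. The sketch below flags outliers with the interquartile-range rule and fills missing values with the median of the known values. The function names and the multiplier `k = 1.5` are assumptions, and a GenAI-based system would typically use richer context than these rules do.

```python
from statistics import median, quantiles
from typing import List, Optional


def flag_outliers(values: List[float], k: float = 1.5) -> List[float]:
    """Return values lying more than k * IQR outside the first or third quartile."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def impute_missing(values: List[Optional[float]]) -> List[float]:
    """Replace None entries with the median of the known values."""
    known = [v for v in values if v is not None]
    fill = median(known)
    return [fill if v is None else v for v in values]
```

For transaction amounts that mostly sit around 100, a single value of 5000 would be flagged, and a missing amount would be filled with the typical (median) value.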

3. Performance Optimization

GenAI continuously monitors pipeline performance and identifies optimization opportunities.

Example:

- It suggests partitioning strategies for large datasets.

- It recommends query rewrites for better execution plans.

4. Proactive Failure Prediction and Resolution

Based on historical data, AI models predict potential pipeline failures and provide actionable recommendations.

Example:

- AI predicts storage capacity issues and triggers auto-scaling.

- It generates alerts with resolution steps for database deadlocks.
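
The storage example can be illustrated with a very simple forecast: estimate average daily growth from recent usage and project when capacity will be reached. This is a linear extrapolation under the assumption of steady growth, not a learned model, and the function name `days_until_full` is hypothetical.

```python
from typing import List, Optional


def days_until_full(daily_usage_gb: List[float], capacity_gb: float) -> Optional[float]:
    """Estimate days until storage is full, assuming growth continues linearly.

    `daily_usage_gb` is a series of total usage readings, one per day, oldest first.
    Returns None when there is too little data or usage is not growing.
    """
    if len(daily_usage_gb) < 2:
        return None
    growth_per_day = (daily_usage_gb[-1] - daily_usage_gb[0]) / (len(daily_usage_gb) - 1)
    if growth_per_day <= 0:
        return None
    remaining_gb = capacity_gb - daily_usage_gb[-1]
    return max(0.0, remaining_gb / growth_per_day)
```

An alerting rule could then trigger scaling or a notification when the estimate drops below a chosen number of days.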

5. Resource Management

GenAI ensures optimal resource utilization by analyzing workload patterns and adjusting compute resources accordingly.

Example:

- During peak hours, it allocates additional Spark nodes.

- It deallocates unused resources to minimize costs.

Implementing GenAI in Azure Synapse Analytics

Step 1: Data Collection and Preprocessing

Collect data from diverse sources using Azure Data Factory or Event Hubs. Clean and normalize the data using AI-powered data preparation tools.

Step 2: Integrating GenAI Models

- Use Azure Machine Learning to build and train custom GenAI models.

- Deploy models using Azure Kubernetes Service (AKS) or Azure Functions.

- Integrate models with Synapse Pipelines using REST APIs.
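
The REST integration in the last step usually comes down to sending a JSON payload to the deployed model's scoring endpoint. The sketch below builds such a request with Python's standard library. The URL, the API key placeholder, and the `input_data` payload shape are all hypothetical; the actual values and schema depend on how the model was deployed.

```python
import json
import urllib.request
from typing import Any, Dict

# Hypothetical endpoint and key: replace with the values from your own deployment.
SCORING_URL = "https://example.invalid/score"
API_KEY = "<your-api-key>"


def build_scoring_request(pipeline_metrics: Dict[str, Any]) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying pipeline metrics as JSON."""
    # The "input_data" wrapper is an assumed payload shape, not a fixed contract.
    body = json.dumps({"input_data": pipeline_metrics}).encode("utf-8")
    return urllib.request.Request(
        SCORING_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )
```

Sending the request is then a matter of passing it to `urllib.request.urlopen`; inside a Synapse pipeline, the equivalent call would typically be configured in a Web activity rather than written in code.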

Step 3: Monitoring and Optimization

- Enable Azure Monitor to collect performance metrics.

- Use AI-based anomaly detection to track pipeline performance.

- Apply feedback loops for continuous learning and improvement.

Step 4: Automating Decision-Making

Leverage AI-driven decision engines to automate pipeline decisions such as resource allocation, error handling, and schema evolution.

Comparative Analysis: Traditional vs. GenAI-Powered Pipelines

| Feature | Traditional Pipelines | Generative AI-Powered Pipelines |
| --- | --- | --- |
| Data Transformation | Manual SQL Coding | Automated Code Generation |
| Error Detection | Reactive | Predictive and Proactive |
| Resource Management | Fixed Allocation | Dynamic Adjustment |
| Data Quality Management | Rule-based Checks | AI-Driven Anomaly Detection |
| Optimization | Periodic Manual Tuning | Continuous AI-driven Tuning |

Best Practices for Using GenAI in Data Pipeline Optimization

Are you new to generative AI and unsure how to use it for data pipeline optimization? The following practices are a good place to start.

- Define Clear Objectives: Identify optimization goals like reducing latency, improving data quality, or lowering costs.

- Start Small: Implement AI in a subset of pipelines and measure its impact before scaling.

- Ensure Data Governance: Maintain robust data quality standards and compliance measures.

- Enable Explainability: Use interpretable AI models to ensure transparency in decision-making.

- Continuously Monitor and Improve: Implement feedback loops to refine AI algorithms over time.


Conclusion

Integrating generative AI into data pipeline management with Azure Synapse Analytics transforms how enterprises handle data. From predictive error detection to dynamic resource management, GenAI-driven automation enhances operational efficiency, reduces costs, and accelerates insights.

Organizations that want to grow in a competitive industry should adopt this AI-powered model to automate and optimize their data pipelines. This will give them faster, actionable insights and position them for sustained growth in a data-driven world.

Ready to revolutionize your data pipelines? Auxiliobits can help you implement AI-powered data solutions tailored to your enterprise needs. Contact us today to get started!

Let’s Connect

At Auxiliobits, we understand the challenges enterprises face in managing and optimizing data pipelines. With our deep expertise in AI, automation, and cloud solutions, we provide tailored services to integrate Generative AI with Azure Synapse Analytics. Our AI-powered solutions enable seamless data management, predictive error detection, real-time resource optimization, and enhanced data quality.