Key Takeaways

- Ethical deployment of autonomous AI agents requires transparency, fairness, and accountability to prevent bias, protect privacy, and uphold trust across critical sectors like healthcare and finance.

- While autonomy enables efficiency, human oversight is essential to prevent AI agents from making unchecked decisions that could lead to harmful or unintended consequences.

- Cyber threats can cause autonomous agents to malfunction or be exploited, making robust security measures and continuous monitoring essential for safe deployment and use.

- Overdependence on AI may erode critical human skills, highlighting the need for balanced integration that supports rather than replaces human expertise.

- AI-driven automation risks workforce displacement and the spread of misinformation, demanding responsible implementation strategies and continuous adaptation by both organizations and society.

Artificial intelligence (AI) is everywhere. It now helps people with everything from generating Ghibli-style images to answering everyday queries. As AI has captured global attention, one of its core components has gained popularity too: autonomous AI agents, intelligent systems that can carry out tasks without continuous human direction. The technology has found use across many sectors, where it mimics human behavior to complete daily tasks.

The deployment of autonomous AI agents introduces a host of ethical dilemmas and operational risks that must be critically examined. One primary problem is whether the procedures behind an agent’s decisions are transparent. Without transparency, biased or improperly influenced decisions can go unnoticed and have profound consequences. This issue is especially acute in industries like banking and healthcare. Another problem that cannot be taken lightly is data privacy and security. Because autonomous AI agents depend on vast amounts of data, including personal details and company information, it is imperative that this data is protected and used ethically.

To address such challenges, policymakers and firms must adopt an AI framework that considers privacy, fairness, transparency, accountability, and collaboration between humans and machines. Best practices include clear ethical guidelines, stakeholder involvement, and regular monitoring to ensure AI behavior aligns with organizational and societal values.

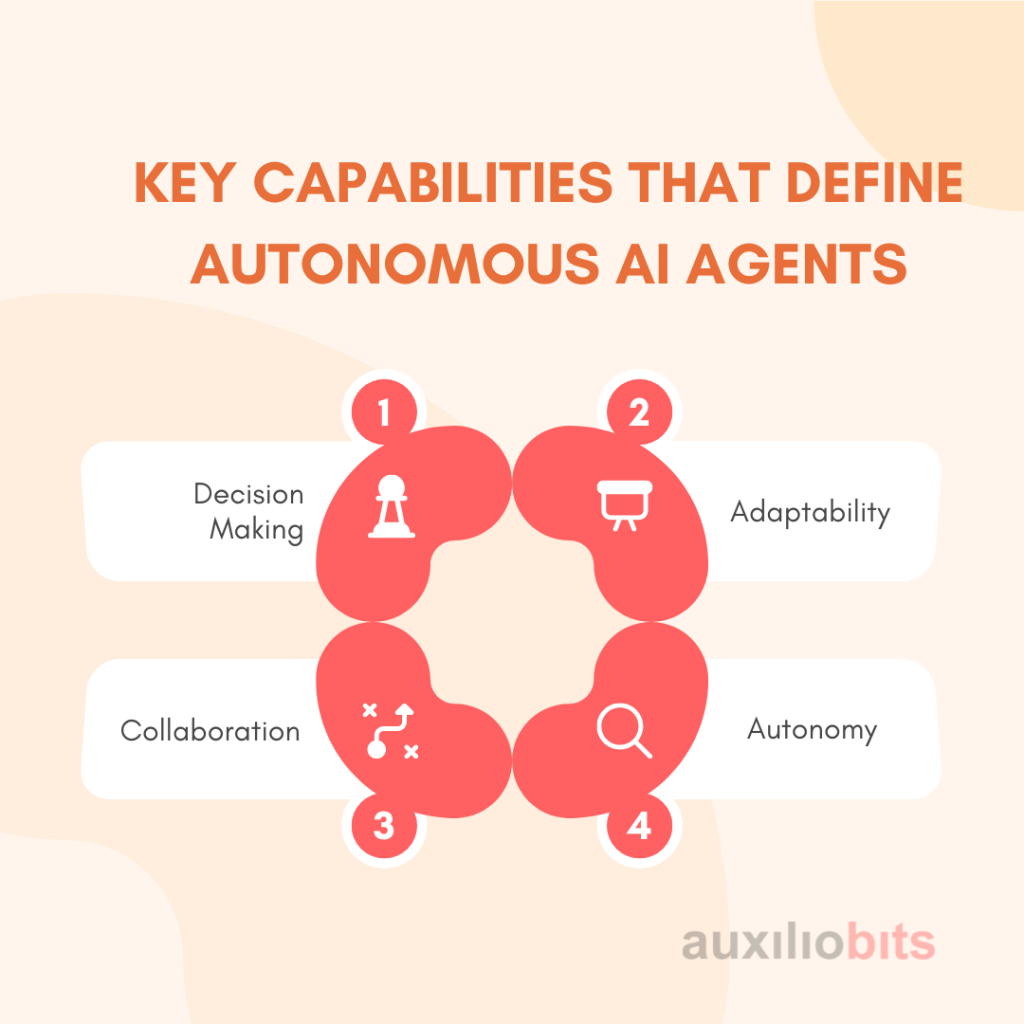

Key Capabilities That Define Autonomous AI Agents

The following capabilities define autonomous AI agents:

1. Decision-Making

One of the essential benefits of autonomous AI agents is that they can make decisions without human input. They weigh multiple variables using algorithms to select the most suitable option. For example, on a customer call, an AI agent can recognize the customer’s tone, understand the requirements, and provide an appropriate solution.

2. Adaptability

Autonomous AI agents are more adaptable than rigid systems. They can improve their behavior, learn from new experiences, and respond to environmental changes. This is one primary reason they suit applications in unpredictable domains, such as emergency response.

3. Collaboration

Autonomous AI agents are not isolated systems. Unlike traditional systems, they can communicate smoothly with other systems and with humans. They can simplify intricate tasks, coordinate across networks, and work alongside humans to offer suitable solutions.

4. Autonomy

The most appreciated benefit of an autonomous AI agent is its degree of autonomy. Once configured with the requirements, these agents can operate with little human intervention. They are therefore valuable for any task that needs round-the-clock availability, speed, and scalability.

Ethical Considerations

Even though AI agents have much to offer, they must follow ethical guidelines. Autonomous AI agents can handle tasks efficiently and remain available 24/7. Nevertheless, companies must ensure these agents operate within moral, legal, and societal frameworks. No firm should compromise on ethical guidelines, and each should ensure that its agents are used responsibly and fairly.

1. Bias and Discrimination

Two of the most widely discussed ethical issues are bias and discrimination. AI agents learn from vast datasets, many reflecting societal inequalities and historical prejudices. If these biases are not correctly identified and addressed, the AI agent may replicate or amplify them. For example, in recruitment processes, an AI-powered hiring agent might favor candidates of a particular gender or ethnicity based on skewed data, leading to discriminatory practices. This harms individuals and exposes organizations to reputational damage and legal consequences.
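One common way to surface this kind of skew is to compare selection rates across groups. The sketch below is illustrative only: the function names and the `(group, selected)` input format are assumptions, and the 0.8 cutoff mentioned in the comment is the widely cited "four-fifths" rule of thumb, not a legal test.

```python
from collections import Counter

def selection_rates(decisions):
    """Return the fraction of selected candidates per group.

    `decisions` is an iterable of (group, selected) pairs; this input
    shape is a hypothetical format chosen for the example.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def disparate_impact_ratio(decisions):
    """Ratio of the lowest group selection rate to the highest.

    Values below roughly 0.8 are often treated as a signal that the
    outcomes deserve a closer review. Returns None if nobody was selected.
    """
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return None
    return min(rates.values()) / highest
```

A low ratio does not prove discrimination on its own, but it gives reviewers a concrete number to investigate before an agent’s recommendations are acted on.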

2. Privacy Invasion

Another primary concern is privacy invasion. Autonomous agents often require access to large amounts of data to function effectively, including sensitive personal or organizational information. Without proper consent and strict governance, these agents may inadvertently collect or misuse data. Consider an AI system that monitors employee behavior to improve productivity: if this is done without transparency or permission, it could lead to serious breaches of privacy and trust.

3. Accountability

Accountability is another complex challenge. Determining who is responsible becomes murky when an AI agent’s action leads to unintended consequences. Is it the developer who created the algorithm, the organization that deployed it, or the end user who interacted with it? In high-risk scenarios, such as an autonomous vehicle causing a traffic accident, clear lines of accountability must be established to ensure that ethical and legal obligations are met.

4. Transparency

Closely related is the issue of transparency. Many AI systems, especially those powered by deep learning, function as “black boxes.” Their decision-making processes are often opaque, making it difficult to understand how conclusions were reached. This lack of clarity becomes particularly dangerous in critical areas such as healthcare or criminal justice, where AI agents may make life-altering decisions. Stakeholders must be able to see how these systems work, especially when outcomes have significant human impact.

5. Autonomy and Control

Finally, there is the tension between autonomy and control. While autonomy is a defining trait of these agents, it must be balanced with mechanisms that allow human oversight. For instance, an AI agent might suggest a diagnosis or treatment plan in a medical setting. However, if it overrides a healthcare professional’s judgment without checks, it could lead to harmful outcomes. It’s vital to design systems that allow human intervention when necessary, ensuring that AI augments human expertise rather than undermining it.
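The idea of allowing human intervention can be sketched as a simple approval gate. This is a minimal illustration under stated assumptions: the `Recommendation` type, the `confidence` score, the `high_risk` flag, and the 0.9 threshold are all hypothetical and would need to be defined by the deploying organization.

```python
from dataclasses import dataclass

@dataclass
class Recommendation:
    action: str
    confidence: float  # hypothetical model confidence in [0, 1]
    high_risk: bool    # hypothetical flag set by policy, e.g. clinical decisions

def requires_human_review(rec, min_confidence=0.9):
    """High-risk or low-confidence recommendations always go to a person."""
    return rec.high_risk or rec.confidence < min_confidence

def execute(rec, human_approves):
    """Run the recommendation, deferring to a human reviewer when required.

    `human_approves` is a callable standing in for a real review step.
    """
    if requires_human_review(rec):
        if human_approves(rec):
            return f"executed after review: {rec.action}"
        return f"blocked by reviewer: {rec.action}"
    return f"executed automatically: {rec.action}"
```

In this design the agent never acts alone on a high-risk recommendation, no matter how confident it is, which keeps the professional’s judgment as the final check.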

Risks of Deploying Autonomous AI Agents

As businesses and institutions increasingly integrate autonomous AI agents into their operations, it is essential to recognize that these systems, while powerful, are not without limitations. Autonomous agents bring substantial opportunities and introduce various technical, operational, and societal risks. Ignoring these risks can lead to severe consequences, from financial losses to ethical breaches and public mistrust.

1. Operational Failure

One of the most immediate concerns is operational failure. Like any software-driven system, AI agents are prone to bugs, unexpected input data, or environmental changes not accounted for during training. Because these agents act with a degree of independence, an error in their logic or a misinterpretation of real-time data can lead to critical failures. For instance, a financial services AI agent managing large-scale transactions may encounter glitches and misroute payments, leading to significant financial discrepancies. Unlike traditional systems that require direct user commands, autonomous agents might continue to act incorrectly until the error is identified and the agent is shut down.
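One way to limit how long a failing agent keeps acting is a circuit breaker that halts it after repeated errors. The sketch below is an assumption-laden illustration, not a production design: the class name, the `max_failures` default, and the choice to count only consecutive exceptions are all hypothetical.

```python
class CircuitBreaker:
    """Stop calling an agent action after too many consecutive failures."""

    def __init__(self, max_failures=3):
        self.max_failures = max_failures
        self.failures = 0
        self.open = False  # open circuit means the agent is halted

    def call(self, action, *args):
        if self.open:
            raise RuntimeError("agent halted: circuit breaker is open")
        try:
            result = action(*args)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.open = True  # stays open until a human resets it
            raise
        self.failures = 0  # a success resets the consecutive-failure count
        return result

    def reset(self):
        """Manual reset, intended to be triggered by a human operator."""
        self.failures = 0
        self.open = False
```

Note that this only catches failures that raise errors. An agent that misroutes payments silently would also need monitoring on its outputs, such as reconciliation checks.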

2. Security Threats

Another pressing issue involves security threats. AI agents, especially those connected to the internet or integrated into core enterprise systems, are potential targets for malicious attacks. Hackers could exploit vulnerabilities in the agent’s design to manipulate its behavior, access restricted data, or hijack the agent for unauthorized tasks. For example, a virtual AI assistant managing smart office systems could be manipulated to grant external access to internal networks, risking data breaches and compromising sensitive company operations. Ensuring robust cybersecurity protocols for AI agents is paramount to mitigate these threats.
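One basic safeguard is to restrict an agent to an explicit allowlist of actions, so that a manipulated request cannot trigger anything outside its intended scope. The following is a minimal sketch; the action names, the `handlers` mapping, and the exception type are hypothetical and chosen only to mirror the office-assistant example above.

```python
# Hypothetical set of actions an office assistant is permitted to perform.
ALLOWED_ACTIONS = frozenset({"adjust_lighting", "book_meeting_room", "set_thermostat"})

class ActionNotPermitted(Exception):
    """Raised when the agent requests an action outside its allowlist."""

def dispatch(action, handlers, allowed=ALLOWED_ACTIONS):
    """Run `action` only if it is explicitly allowed.

    `handlers` maps action names to zero-argument callables. Anything not
    on the allowlist is refused, even if a handler for it exists.
    """
    if action not in allowed:
        raise ActionNotPermitted(f"refused action: {action}")
    return handlers[action]()
```

An allowlist does not replace authentication, network controls, or monitoring, but it narrows what an attacker can do after gaining influence over the agent’s inputs.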

3. Overdependence on AI Agents

Overdependence on autonomous AI agents is also a growing concern. As these systems become more capable and widely adopted, there is a risk that human skills in critical domains may deteriorate. In sectors like healthcare, professionals may rely excessively on AI for diagnosis or treatment suggestions, potentially diminishing their diagnostic skills over time. This dependency creates vulnerabilities when AI fails or cannot be used, leaving human operators unprepared to intervene effectively.

4. Job Displacement

The deployment of autonomous agents also brings the social challenge of job displacement. As these agents take over routine, rule-based, and even some cognitive tasks, there is a tangible risk of redundancy in labor-intensive roles. For example, robotic process automation (RPA) agents can efficiently manage data entry, invoicing, and report generation, leading to workforce downsizing in back-office operations. This affects livelihoods and fuels concerns over inequality and the pace of technological change outpacing workforce adaptation.

5. Misinformation

Another emerging risk is misinformation, especially with the rise of generative AI agents. These systems can create text, images, or audio content that appears legitimate but may be factually incorrect or deliberately misleading. An AI chatbot, for instance, could unintentionally spread fake news or biased information, influencing public opinion or even corporate decision-making. The viral nature of digital content amplifies the impact of such misinformation, making its containment difficult once released.

Conclusion

Autonomous AI agents are transforming industries by offering unmatched efficiency, adaptability, and decision-making capabilities. However, their growing influence also brings significant ethical, operational, and societal challenges. From data privacy and bias to accountability, security threats, and job displacement, the risks associated with autonomous AI cannot be overlooked. Organizations and policymakers must adopt a responsible approach that balances innovation with regulation, autonomy with human oversight, and efficiency with ethical safeguards. Only by embedding transparency, fairness, and accountability into the lifecycle of AI agents can we ensure they serve as a force for good—enhancing human potential while minimizing harm.